A friend of mine shares an email thread from his organization discussing the definition of CUSTOMER, disagreeing as to which categories of stakeholder should be included and which should be excluded.

Why is this important? Why does it matter how the CUSTOMER label is used? Well, if you are going to call yourself a customer-centric organization, and aim to improve customer experience and increase customer satisfaction, it would help to know whose experience and whose satisfaction matter. And how many customers are there actually?

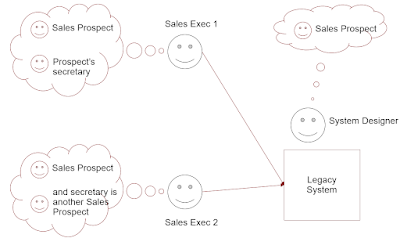

The organization provides services to A, which are experienced by B and paid for by C, based on a contractual agreement with D. This is a complex network of actors with overlapping roles, and the debate is about which of these count as customers and which don't. I have often seen similar confusion elsewhere.

My friend asks: Am I supposed to have a different customer definition for different teams (splitter), or one customer definition across the whole business (lumper)? As an architect, my standard response to this kind of question is: it depends.

One possible solution is to prefix everything - CONTRACT CUSTOMER, SERVICE CUSTOMER, and so on. But although that may help sort things out, the real challenge is to achieve a joined-up strategy across the various capabilities, processes, data, systems and teams that are focused on the As, the Bs, the Cs and the Ds, rather than arguing as to which of these overlapping groups best deserves the CUSTOMER label.

Sometimes there is no correct answer, but a best fit across the board. That's architecture for you!

Many business concepts are not amenable to simple definition but have fuzzy boundaries. In my 1992 book, I explain the difference between monothetic classification (here is a single defining characteristic that all instances possess) and polythetic classification (here is a set of characteristics that instances mostly possess). See also my post Modelling Complex Classification (February 2009).
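To make the distinction concrete, here is a minimal Python sketch. The characteristic names (one for each of the roles played by the As, Bs, Cs and Ds above) and the threshold of two are assumptions invented for illustration. They are not taken from the book.

```python
from dataclasses import dataclass

# Hypothetical characteristics, one per role in the example above.
CUSTOMER_CHARACTERISTICS = frozenset({
    "receives_service",     # like the As
    "experiences_service",  # like the Bs
    "pays_for_service",     # like the Cs
    "holds_contract",       # like the Ds
})


@dataclass(frozen=True)
class Party:
    name: str
    traits: frozenset[str]


def is_customer_monothetic(party: Party, defining_trait: str = "pays_for_service") -> bool:
    """Monothetic: a single defining characteristic that every member must possess."""
    return defining_trait in party.traits


def is_customer_polythetic(party: Party, threshold: int = 2) -> bool:
    """Polythetic: a member has at least `threshold` of the characteristics,
    but no single characteristic is required."""
    return len(party.traits & CUSTOMER_CHARACTERISTICS) >= threshold


payer = Party("payer", frozenset({"pays_for_service"}))
end_user = Party("end user", frozenset({"receives_service", "experiences_service"}))

for p in (payer, end_user):
    print(p.name, is_customer_monothetic(p), is_customer_polythetic(p))
```

Under these assumptions the monothetic rule admits the payer but not the end user, while the polythetic rule admits the end user but not the payer. Neither rule is wrong; they simply draw the boundary in different places.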

But my friend's problem is a slightly different one: how to deal with multiple conflicting monothetic definitions. One possibility is to lump all the As, Bs, Cs and Ds into a single overarching CUSTOMER class, and then provide different views (or frames) for different teams. But this still leaves some important questions open, such as which of these types of customer should be included in the Customer Satisfaction Survey, whether they all carry equal weight in the overall scores, and whose responsibility it is to improve these scores.
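The lumper option can be pictured with a minimal Python sketch like the following. It assumes a role-based model in which each party in a single CUSTOMER class carries a set of roles, and each team sees that class through its own frame. The role names, team names and mappings are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # Hypothetical roles, one per group in the example
    RECIPIENT = "A"        # receives the service
    USER = "B"             # experiences the service
    PAYER = "C"            # pays for the service
    CONTRACT_HOLDER = "D"  # party to the contractual agreement


@dataclass(frozen=True)
class Customer:
    """One lumped CUSTOMER class; a party may play several roles at once."""
    party_id: str
    roles: frozenset[Role]


# Each team's frame: which roles make a party count as "their" customer (assumed mapping).
TEAM_VIEWS: dict[str, frozenset[Role]] = {
    "billing": frozenset({Role.PAYER}),
    "service_delivery": frozenset({Role.RECIPIENT, Role.USER}),
    "contracts": frozenset({Role.CONTRACT_HOLDER}),
}


def customers_for(team: str, customers: list[Customer]) -> list[Customer]:
    """Return the customers visible through one team's frame."""
    frame = TEAM_VIEWS[team]
    return [c for c in customers if c.roles & frame]


customers = [
    Customer("p1", frozenset({Role.PAYER, Role.CONTRACT_HOLDER})),
    Customer("p2", frozenset({Role.USER})),
    Customer("p3", frozenset({Role.RECIPIENT, Role.USER, Role.PAYER})),
]

per_team = {team: [c.party_id for c in customers_for(team, customers)] for team in TEAM_VIEWS}
sum_of_team_counts = sum(len(ids) for ids in per_team.values())
distinct_customers = len({pid for ids in per_team.values() for pid in ids})
print(per_team)
print(sum_of_team_counts, distinct_customers)
```

In this made-up data the three team views add up to five customers, although only three distinct parties are involved. The model makes the overlap visible, but it does not decide which of these parties belong in the survey, how they should be weighted, or who is responsible for their scores.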

In her book on medical ontology, Annemarie Mol develops Marilyn Strathern's notion of partial connections as a way of overcoming an apparent fragmentation of identity - in our example, between the Contract Customer and the Service Customer - when these are sometimes the same person.

Being one shapes and informs the other while they are also different identities. ... Not two different persons or one person divided into two. But they are partially connected, more than one, and less than two.

Mol pp 80-82

Mol argues that frictions are vital elements of wholes,

... a tension that comes about inevitably from the fact that, somehow, we have to share the world. There need not be a single victor as soon as we do not manage to smooth all our differences away into consensus.

Mol p 114

Mol's book is about medical practice rather than commercial business, but much of what she says about patients and their conditions applies also to customers. For example, there are some elements that generally belong to "the patient", and although in some cases there may be a different person (for example a parent or next-of-kin) who stands proxy for the patient and speaks on their behalf, it is usually not considered necessary to mention this complication except when it is specifically relevant.

Similar complexities can be found in commercial organizations. Let's suppose most customers pay their own bills but some customers have more complicated arrangements. It should be possible to hide this kind of complexity most of the time.

Human beings can generally cope with these elisions, ambiguities and tensions in practice, but machines (by which I mean bureaucracies as well as algorithms) not so well. Organizations tend to impose standard performance targets, monitored and controlled through standard reports and dashboards, which fail to allow for these complexities. My friend's problem is then ultimately a political one: how responsibility for "customers" is distributed and governed, who needs to see what, and what consequences may follow.

(As it happens, I was talking to another friend yesterday, a doctor, about the way performance targets are defined, measured and improved in the National Health Service. This raises some related issues, which I may try to cover in a future post.)

Annemarie Mol, The Body Multiple: Ontology in Medical Practice (Duke University Press 2002)

Richard Veryard, Information Modelling - Practical Guidance (Prentice-Hall 1992)

- Polythetic Classification, Section 3.4.1, pp 99-100

- Lumpers and Splitters, Section 6.3.1, pp 169-171